Using AI is not cheating. It is a way to become more productive. You pay your employees because they perform tasks that create value for the organization. So it makes sense to let them use the best tools available to do their jobs.

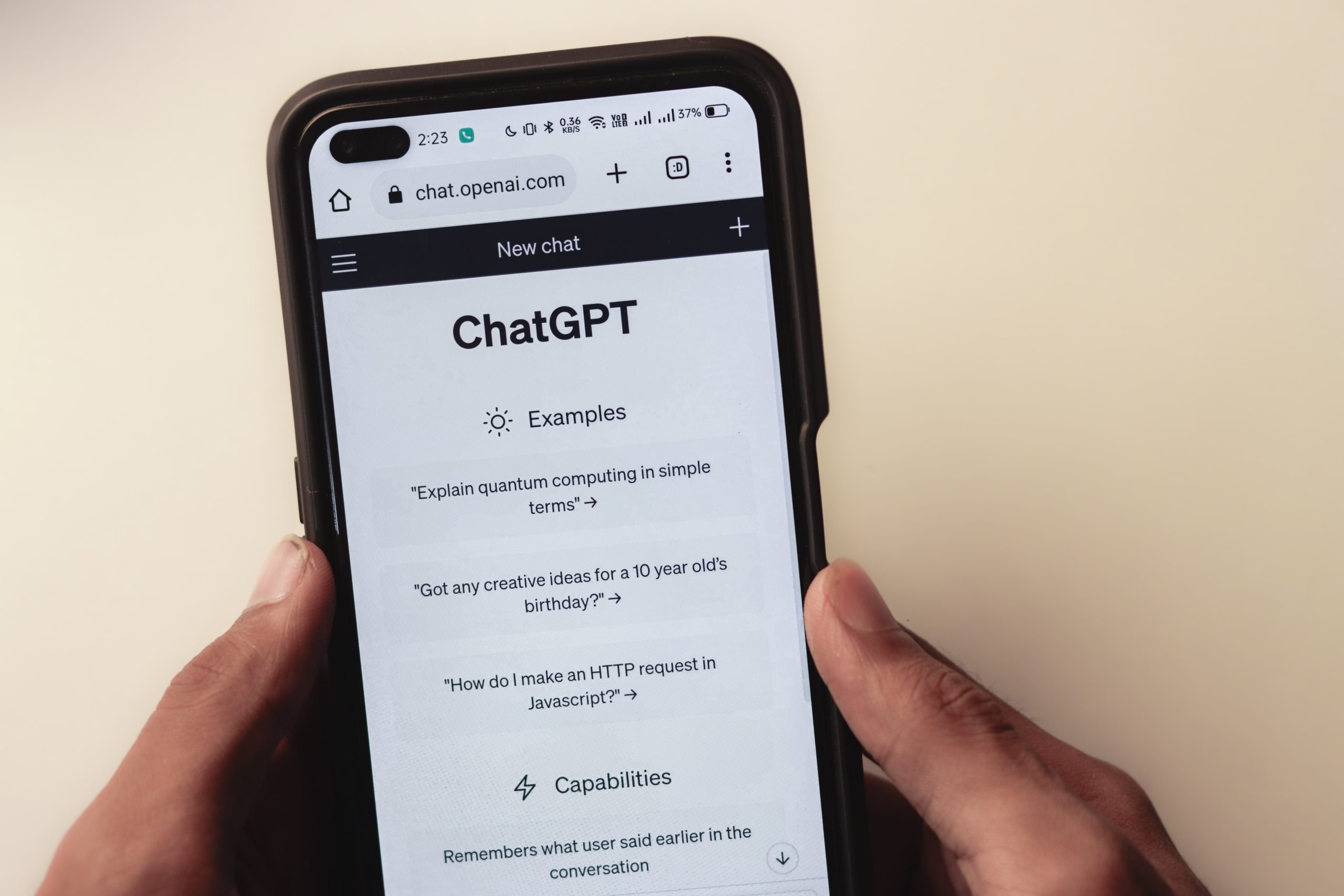

Just as some schools are trying to prevent students from using AI, some companies are trying to outlaw it. It won’t work. Research shows that 47% of people who used AI tools experienced increased job satisfaction, and 78% were more productive. You can’t fight such dramatic numbers with a blanket prohibition. If you try, your employees will use AI on their phones or in an incognito browser session while working from home.

By all means, create rules about how and where employees can use AI, and explain them thoroughly. But trying to ban AI is futile.