There are two ways to use Large Language Models (LLMs). One works well; the other works much less well. Over the holidays, I’ve been talking to a lot of family and friends about AI, and it turns out that many people conflate these two approaches.

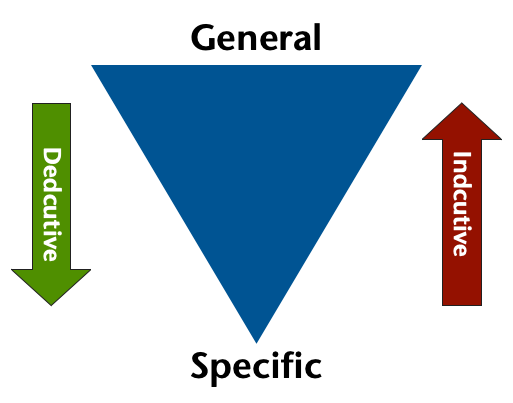

The way that works well is to use LLMs deductively. That means starting with a lot of text and distilling some essence or knowledge from it. Because you are only asking the AI to work from text you have given it, it has much less room to run off on a tangent and make things up. At the same time, it can show off its superhuman ability to process large amounts of text. In an IT context, this is when you give it dozens of interlinked files and ask it to explain them or to find inconsistencies or bugs.

The way that doesn’t work well is using LLMs inductively. That is when you ask the model to produce text from a short prompt, relying mostly on its large statistical model. This allows it to confabulate freely, and the results are hit-or-miss. In an IT context, this is when you ask it to write code. Sometimes it works; often it doesn’t.

Whenever you discuss LLMs with someone, set the stage by defining the inductive/deductive difference. If people already know, no harm done. If they don’t have this frame of reference, establishing it makes for much better conversations.